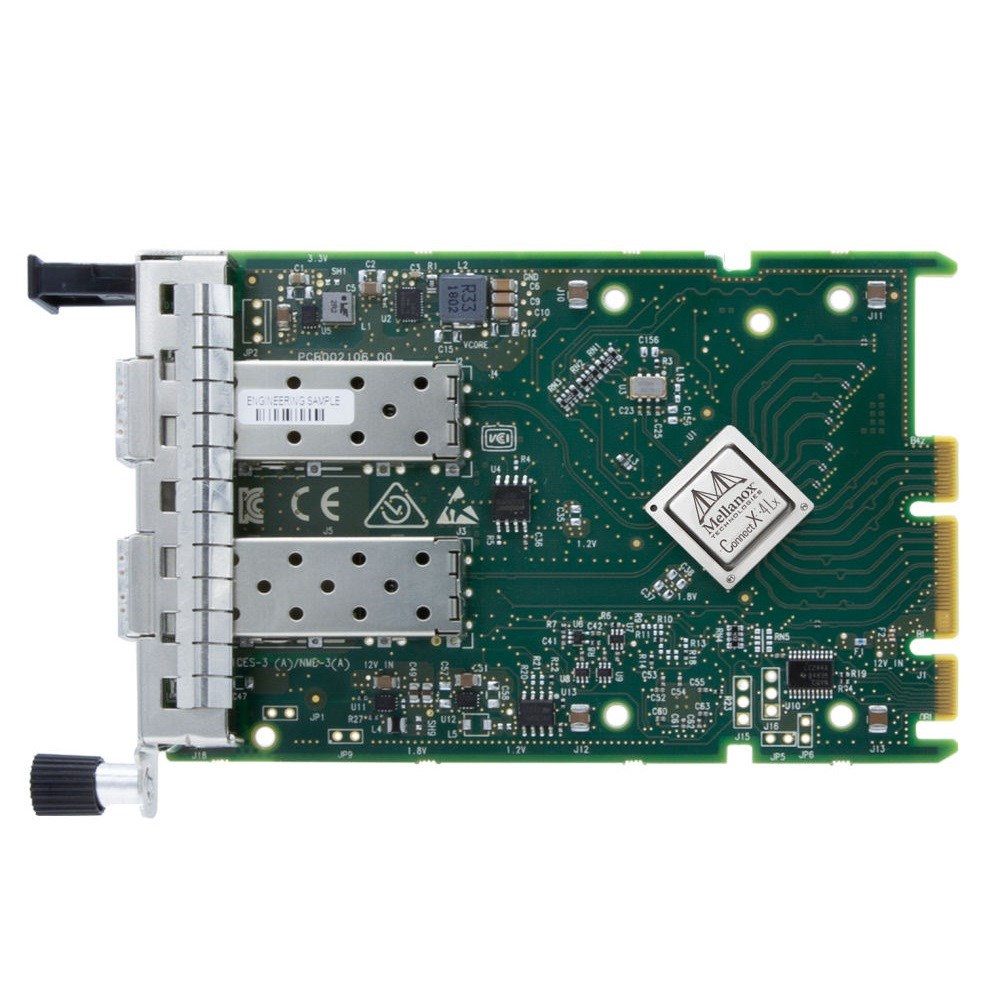

LENOVO 4XC7A08246 ThinkSystem Mellanox ConnectX-4 Lx 10/25GbE SFP28 2-port OCP Ethernet Adapter

Abstract

ConnectX-4 from Mellanox is a family of high-performance and low-latency Ethernet and InfiniBand adapters. The ConnectX-4 Lx EN adapters are available in 40 Gb and 25 Gb Ethernet speeds and the ConnectX-4 Virtual Protocol Interconnect (VPI) adapters support either InfiniBand or Ethernet.

This product guide provides essential presales information to understand the ConnectX-4 offerings and their key features, specifications, and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about ConnectX-4 network adapters and consider their use in IT solutions.

Change History

Changes in the January 16, 2021 update:

- 100Gb transceiver 7G17A03539 also supports 40Gb when installed in a Mellanox adapter (Supported transceivers and cables section)

Introduction


These adapters address virtualized infrastructure challenges, delivering best-in-class performance to a range of demanding markets and applications. They provide true hardware-based I/O isolation with high scalability and efficiency, making them a cost-effective and flexible solution for Web 2.0, cloud, data analytics, database, and storage platforms.

The following figure shows the Mellanox ConnectX-4 2x100GbE/EDR IB QSFP28 VPI Adapter (the standard heat sink has been removed in this photo).

Technical Specifications

PCIe 3.0 host interface:

- ConnectX-4 Lx Ethernet adapters: PCIe 3.0 x8 interface

- ConnectX-4 EDR InfiniBand / 100 Gb Ethernet adapter: PCIe 3.0 x16 interface

- Support for MSI/MSI-X mechanisms
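The x8 and x16 widths above can be sanity-checked with a little arithmetic. The sketch below assumes PCIe 3.0's 8 GT/s per-lane signaling rate and 128b/130b line encoding, and it ignores TLP/DLLP protocol overhead, so real usable throughput is somewhat lower than what it reports. The helper names are illustrative only.

```python
from fractions import Fraction

# PCIe 3.0: 8 GT/s per lane with 128b/130b line encoding.
PCIE3_GT_PER_LANE = Fraction(8)
PCIE3_ENCODING = Fraction(128, 130)


def pcie3_gbps(lanes: int) -> Fraction:
    """Per-direction bandwidth after line encoding, before TLP/DLLP protocol overhead."""
    return PCIE3_GT_PER_LANE * PCIE3_ENCODING * lanes


def fits(lanes: int, ports: int, port_gbps: int) -> bool:
    """True if all ports running at line rate fit within the encoded link bandwidth."""
    return ports * port_gbps <= pcie3_gbps(lanes)


for lanes, ports, speed in [(8, 2, 25), (8, 1, 100), (16, 1, 100), (16, 2, 100)]:
    print(f"x{lanes}: {float(pcie3_gbps(lanes)):.1f} Gb/s, "
          f"{ports}x{speed}GbE at line rate: {fits(lanes, ports, speed)}")
```

Under these assumptions an x8 link carries roughly 63 Gb/s per direction, enough for two 25 Gb ports at line rate but not for one 100 Gb port, while an x16 link (about 126 Gb/s) covers one 100 Gb port but not two running at full rate at the same time.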

External connectors:

- 25 Gb PCIe and ML2 adapters: SFP28

- 40 Gb and 100 Gb adapters: QSFP28

Ethernet standards (all adapters, except where noted):

- 25G Ethernet Consortium (25 Gb)

- 25G Ethernet Consortium (50 Gb) (100Gb/EDR adapter only)

- IEEE 802.3bj, 802.3bm 100 Gigabit Ethernet (100Gb/EDR adapter only)

- IEEE 802.3ba 40 Gigabit Ethernet (100Gb/EDR and 40Gb adapters only)

- IEEE 802.3by 25 Gigabit Ethernet

- IEEE 802.3ae 10 Gigabit Ethernet

- IEEE 802.3az Energy Efficient Ethernet

- IEEE 802.3ap-based auto-negotiation and KR startup

- Proprietary Ethernet protocols (20/40GBASE-R2) (40Gb adapter only)

- IEEE 802.3ad, 802.1AX Link Aggregation

- IEEE 802.1Q, 802.1P VLAN tags and priority

- IEEE 802.1Qau (QCN) – Congestion Notification

- IEEE 802.1Qaz (ETS)

- IEEE 802.1Qbb (PFC)

- IEEE 802.1Qbg

- IEEE 1588v2

- Jumbo frame support (9.6KB)

- IPv4 (RFC 791)

- IPv6 (RFC 2460)
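The IEEE 802.1Q/802.1p entry above refers to the 4-byte VLAN tag inserted after the source MAC address, which the adapter can insert and strip in hardware. As a generic illustration (not adapter- or driver-specific code), the sketch below packs and parses that tag: a 0x8100 TPID followed by a 16-bit TCI holding the 3-bit 802.1p priority (PCP), the 1-bit DEI, and the 12-bit VLAN ID. The function names are hypothetical.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag: TPID, then TCI = PCP(3) | DEI(1) | VID(12)."""
    if not 0 <= pcp <= 7:
        raise ValueError("PCP (802.1p priority) must be 0-7")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    # VID 0 marks a priority-tagged frame; 4095 is reserved.
    if not 0 <= vid <= 4094:
        raise ValueError("VID must be 0-4094")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> tuple:
    """Return (vid, pcp, dei) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"unexpected TPID 0x{tpid:04x}")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 1


print(vlan_tag(100, pcp=5).hex())
```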

InfiniBand protocols (VPI InfiniBand adapters only):

- InfiniBand: IBTA v1.3 Auto-Negotiation

- 1X/2X/4X SDR (2.5 Gb/s per lane)

- DDR (5 Gb/s per lane)

- QDR (10 Gb/s per lane)

- FDR10 (10.3125 Gb/s per lane)

- FDR (14.0625 Gb/s per lane)

- EDR (25.78125 Gb/s per lane)
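The per-lane figures above are signaling rates. Usable data rates are lower because of line encoding: SDR, DDR, and QDR use 8b/10b, while FDR10, FDR, and EDR use 64b/66b. The sketch below applies that arithmetic for a given link width; the dictionary and function names are illustrative.

```python
from fractions import Fraction

# Signaling rate per lane (Gbaud) and line-encoding efficiency for each speed.
# SDR/DDR/QDR use 8b/10b; FDR10/FDR/EDR use 64b/66b.
IB_SPEEDS = {
    "SDR": (Fraction("2.5"), Fraction(8, 10)),
    "DDR": (Fraction("5"), Fraction(8, 10)),
    "QDR": (Fraction("10"), Fraction(8, 10)),
    "FDR10": (Fraction("10.3125"), Fraction(64, 66)),
    "FDR": (Fraction("14.0625"), Fraction(64, 66)),
    "EDR": (Fraction("25.78125"), Fraction(64, 66)),
}


def ib_data_rate_gbps(speed: str, width: int = 4) -> Fraction:
    """Data rate after encoding overhead for a 1X, 2X, or 4X link."""
    if width not in (1, 2, 4):
        raise ValueError("width must be 1, 2, or 4 lanes")
    signal, efficiency = IB_SPEEDS[speed]
    return signal * efficiency * width


for name in IB_SPEEDS:
    print(f"4X {name}: {float(ib_data_rate_gbps(name)):.2f} Gb/s")
```

For a 4X link this gives 100 Gb/s for EDR, about 54.5 Gb/s for FDR, 40 Gb/s for FDR10, and 32 Gb/s for QDR, which is why 4X EDR is described as a 100 Gb/s link.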

InfiniBand features (VPI InfiniBand adapters only):

- RDMA, Send/Receive semantics

- Hardware-based congestion control

- Atomic operations

- 16 million I/O channels

- 256-byte to 4-KB MTU, 2-GB messages

Note: 8 virtual lanes plus VL15 are currently not supported.
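The MTU and message limits above interact: a single RDMA message is carried as a sequence of MTU-sized packets, with segmentation handled by the adapter's hardware transport. The sketch below counts packets per message, assuming the standard InfiniBand path MTUs (256, 512, 1024, 2048, and 4096 bytes) and reading "2 GB" as 2^31 bytes; the names are illustrative.

```python
IB_MTUS = (256, 512, 1024, 2048, 4096)  # valid InfiniBand path MTUs, in bytes
MAX_MESSAGE_BYTES = 2**31                # 2 GB message limit, read as 2 GiB


def packets_per_message(length: int, mtu: int) -> int:
    """Number of MTU-sized packets needed to carry one message of `length` bytes."""
    if mtu not in IB_MTUS:
        raise ValueError(f"unsupported MTU {mtu}")
    if not 1 <= length <= MAX_MESSAGE_BYTES:
        raise ValueError("message length must be 1 byte to 2 GiB")
    return -(-length // mtu)  # ceiling division


for mtu in IB_MTUS:
    print(mtu, packets_per_message(MAX_MESSAGE_BYTES, mtu))
```

A maximum-size message works out to 524,288 packets at a 4 KB MTU and 8,388,608 packets at 256 bytes, which is why larger MTUs reduce per-packet overhead for bulk transfers.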

Enhanced Features

- Hardware-based reliable transport

- Collective operations offloads

- Vector collective operations offloads

- PeerDirect RDMA (GPUDirect communication acceleration)

- 64b/66b encoding

- Extended Reliable Connected transport (XRC)

- Dynamically Connected transport (DCT)

- Enhanced Atomic operations

- Advanced memory mapping support, allowing user mode registration and remapping of memory (UMR)

- On-demand paging (ODP): registration-free RDMA memory access

Storage Offloads

- RAID offload - erasure coding (Reed-Solomon) offload

- T10 DIF - Signature handover operation at wire speed, for ingress and egress traffic (100Gb/EDR adapter only)
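T10 DIF protects each logical block with an 8-byte tuple: a 2-byte guard tag (a CRC-16 over the block data), a 2-byte application tag, and a 4-byte reference tag (for Type 1 protection, the low 32 bits of the LBA). The adapter generates and checks this at wire speed; the sketch below shows the same computation in software, assuming 512-byte blocks and the CRC-16/T10-DIF parameters (polynomial 0x8BB7, zero initial value, no bit reflection). The function names are hypothetical.

```python
import struct

T10_DIF_POLY = 0x8BB7


def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16/T10-DIF: MSB-first, init 0x0000, no final XOR."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ T10_DIF_POLY) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def dif_tuple(block: bytes, lba: int, app_tag: int = 0) -> bytes:
    """8-byte protection information: guard tag, application tag, reference tag."""
    if len(block) != 512:
        raise ValueError("this sketch assumes 512-byte logical blocks")
    return struct.pack("!HHI", crc16_t10dif(block), app_tag, lba & 0xFFFFFFFF)


print(hex(crc16_t10dif(b"123456789")))
```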

Overlay Networks

- Stateless offloads for overlay networks and tunneling protocols

- Hardware offload of encapsulation and decapsulation of NVGRE and VXLAN overlay networks
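To make the VXLAN offload concrete, the sketch below builds the 8-byte VXLAN header defined in RFC 7348 (an 8-bit flags field with the I bit set, a 24-bit VXLAN Network Identifier, and reserved fields) and totals the per-packet encapsulation overhead that the adapter adds and removes. The header layout and UDP port 4789 come from RFC 7348; the function names are illustrative.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned destination port (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Flags(8) + reserved(24), then VNI(24) + reserved(8)."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def vxlan_overhead(outer_ipv6: bool = False, outer_vlan: bool = False) -> int:
    """Bytes added per packet: outer Ethernet + outer IP + UDP + VXLAN headers."""
    ethernet = 14 + (4 if outer_vlan else 0)
    ip = 40 if outer_ipv6 else 20
    return ethernet + ip + 8 + 8


print(vxlan_header(5000).hex(), vxlan_overhead())
```

With an outer IPv4 header and no outer VLAN tag the overhead is 50 bytes, so a 1500-byte inner MTU requires an underlay IP MTU of at least 1550 bytes.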

Hardware-Based I/O Virtualization

- Single Root IOV (SR-IOV)

- Multi-function per port

- Address translation and protection

- Multiple queues per virtual machine

- Enhanced QoS for vNICs

- VMware NetQueue support

- Windows Hyper-V Virtual Machine Queue (VMQ)

Virtualization

- SR-IOV: Up to 256 Virtual Functions (VFs), 1 Physical Function (PF) per port

- SR-IOV on every Physical Function

- 1K ingress and egress QoS levels

- Guaranteed QoS for VMs

Note: NPAR (NIC partitioning) is currently not supported.
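On Linux, Virtual Functions are usually created through the generic PCI sysfs attributes sriov_totalvfs and sriov_numvfs rather than through anything adapter-specific. The sketch below assumes that interface, root privileges, and that SR-IOV is already enabled in the system firmware and adapter configuration; the function name and the device path in the usage line are hypothetical.

```python
from pathlib import Path


def set_num_vfs(device_dir: Path, num_vfs: int) -> None:
    """Set the number of SR-IOV Virtual Functions via the Linux PCI sysfs attributes."""
    total = int((device_dir / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"requested {num_vfs} VFs, device supports at most {total}")
    numvfs_file = device_dir / "sriov_numvfs"
    current = int(numvfs_file.read_text())
    if current == num_vfs:
        return
    # The kernel rejects a change from one non-zero VF count to another,
    # so existing VFs are released first.
    if current != 0 and num_vfs != 0:
        numvfs_file.write_text("0")
    numvfs_file.write_text(str(num_vfs))
```

Usage would look like set_num_vfs(Path("/sys/class/net/ens1f0/device"), 8), where ens1f0 stands in for the adapter port's actual interface name. Reading sriov_totalvfs first keeps the request within what the firmware exposes, up to the 256-VF limit listed above.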

CPU Offloads

- RDMA over Converged Ethernet (RoCE)

- TCP/UDP/IP stateless offload

- LSO, LRO, checksum offload

- RSS (including on encapsulated packets), TSS, HDS, VLAN insertion/stripping, receive flow steering

- Intelligent interrupt coalescence
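RSS spreads incoming flows across receive queues by hashing packet header fields and using the low-order bits of the hash to index an indirection table. The sketch below assumes the Toeplitz hash function described in Microsoft's RSS specification, applied to an IPv4/TCP 4-tuple (source address, destination address, source port, destination port, in that order). The key, table, and function names are hypothetical, and the hash function and key an adapter actually uses depend on driver configuration.

```python
import ipaddress


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash: XOR together the 32-bit key window at every set input bit."""
    key_bits = len(key) * 8
    if len(data) * 8 + 32 > key_bits:
        raise ValueError("key is too short for this input length")
    key_int = int.from_bytes(key, "big")
    result = 0
    for i in range(len(data) * 8):
        byte_index, bit_index = divmod(i, 8)
        if data[byte_index] & (0x80 >> bit_index):
            # Key bits i .. i+31, counting from the most significant bit
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def rss_queue(key, src_ip, dst_ip, src_port, dst_port, indirection_table):
    """Pick a receive queue for an IPv4/TCP flow (hypothetical helper)."""
    size = len(indirection_table)
    if size == 0 or size & (size - 1):
        raise ValueError("indirection table size must be a power of two")
    data = (
        ipaddress.IPv4Address(src_ip).packed
        + ipaddress.IPv4Address(dst_ip).packed
        + src_port.to_bytes(2, "big")
        + dst_port.to_bytes(2, "big")
    )
    # Low-order bits of the hash index the indirection table.
    return indirection_table[toeplitz_hash(key, data) & (size - 1)]


EXAMPLE_KEY = bytes(range(1, 41))  # arbitrary 40-byte key, for illustration only
print(rss_queue(EXAMPLE_KEY, "192.0.2.10", "198.51.100.7", 40000, 443, [0, 1, 2, 3]))
```

Because every packet of a flow carries the same 4-tuple, it always lands on the same queue, which preserves per-flow ordering while different flows spread across CPUs.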

Remote Boot

- Remote boot over InfiniBand (VPI InfiniBand adapters only)

- Remote boot over Ethernet

- Remote boot over iSCSI

- PXE and UEFI

Protocol Support

- OpenMPI, IBM PE, OSU MPI (MVAPICH/2), Intel MPI

- Platform MPI, UPC, Open SHMEM

- TCP/UDP, MPLS, VXLAN, NVGRE, GENEVE

- EoIB, IPoIB, SDP, RDS (VPI InfiniBand adapters only)

- iSER, NFS RDMA, SMB Direct

- uDAPL

Management and Control Interfaces

- NC-SI (25Gb ML2 adapter only)

- PLDM over MCTP over PCIe

- SDN management interface for managing the eSwitch

EAN Number:

0889488497362
